AI Automation with MLOps

MLOps stands for Machine Learning Operations. It is a core function of machine learning engineering, focused on streamlining the process of taking machine learning models to production and then maintaining and monitoring them. MLOps is a collaborative function, often involving data scientists, DevOps engineers, and IT.

- MLOps allows data teams to develop models faster, deliver higher-quality ML models, and deploy them to production sooner.

- MLOps enables vast scalability and management where thousands of models can be overseen, controlled, managed, and monitored.

- With risk mitigation in place from an early stage of the project lifecycle, MLOps can help reduce the overall risk of a project by enabling faster feedback and resolution of issues.

Challenges with Productionizing AI

Productionizing machine learning is difficult because it involves many complex components, such as data ingestion, data preparation, model training, model tuning, deployment, monitoring, and explainability. It also requires collaboration and hand-offs between teams, from Data Engineering to Data Science to ML Engineering. Keeping all these processes in sync requires stringent operational rigor.

When it comes to automating AI, companies are struggling. The trouble lies not only in scaling their processes and implementing new technologies, but also in adopting them. As a result, the majority of enterprises have yet to implement AI in any meaningful way.

Approximately 10 percent of corporate artificial intelligence initiatives are actually implemented. This low rate stems from two underlying causes:

- AI applications in business are new. Technological advancement in AI is outpacing the validation of AI use cases. In the race to deliver value, designing automated solutions isn’t a high priority for a resource-limited enterprise.

- There is a clear gap in automation capabilities within AI. Data science roles are still highly sought after, but every data science project that is handed over brings a further requirement: embedding its output in the business. That work falls to skilled ML engineers and data engineers, who are scarce.

The solution: MLOps

MLOps is machine learning’s tailored approach to productionizing models.

MLOps is a combination of tools and practices aimed at helping you manage your data, models, and algorithms in production. It spans the entire data pipeline from ingestion through inference, covers both batch and online workloads, and supports any type of ML model, from linear and logistic regression to decision trees and beyond.

With MLOps, the process is standardized, steps are automated, goals are aligned, and tooling meets the business requirements. An MLOps solution provides:

- Process Automation: Deployment is automated for all models, making iterations and updates easy and quick.

- Process Standardisation: Eliminating ad hoc hacks and shortcuts creates a business environment in which the end-to-end process architecture is transparent and traceable.

- Flexible Ways of Working: Dynamic, adaptable pipelines, combined with common goals amongst data scientists, engineers, and ops personnel, create an environment conducive to success.

- Sufficient Tooling: The tools used support the process and create a robust architecture, which supports the business need. The functionality of these tools enables compatibility, parallel working, speed of decision making and cost-efficiency.
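
To make the idea of an automated, traceable process more concrete, here is a minimal sketch in Python. It is not a real MLOps framework: the `Pipeline` class and the `ingest`, `train`, and `deploy` steps are hypothetical stand-ins, and the "model" is just the mean of a few numbers. The point is only that every run goes through the same named steps in the same order and leaves a record of what was executed.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

# A step takes the shared context dict and returns an updated context dict.
Step = Callable[[dict[str, Any]], dict[str, Any]]


@dataclass
class Pipeline:
    """Runs named steps in a fixed order and records which ones completed."""
    steps: list[tuple[str, Step]]
    trace: list[str] = field(default_factory=list)

    def run(self, context: dict[str, Any]) -> dict[str, Any]:
        for name, step in self.steps:
            context = step(context)
            self.trace.append(name)  # audit trail of completed steps
        return context


# Hypothetical stand-ins for real ingestion, training, and deployment logic.
def ingest(ctx):
    return {**ctx, "rows": [1.0, 2.0, 3.0, 4.0]}


def train(ctx):
    # The "model" is just the mean of the data, to keep the sketch tiny.
    return {**ctx, "model": sum(ctx["rows"]) / len(ctx["rows"])}


def deploy(ctx):
    return {**ctx, "deployed": True}


pipeline = Pipeline(steps=[("ingest", ingest), ("train", train), ("deploy", deploy)])
result = pipeline.run({})
print(pipeline.trace)   # ['ingest', 'train', 'deploy']
print(result["model"])  # 2.5
```

In practice an orchestration tool would play the role of `Pipeline`, but the contract is similar: named steps, a fixed order, and a trace you can inspect afterwards.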

MLOps does not offer a one-size-fits-all solution for building machine learning pipelines; instead it lets you tailor your deployment strategy based on business needs. For example, if your goal is to optimize sales efficiency by predicting customer lifetime value (LTV), then MLOps helps you build an end-to-end model portfolio optimized for this specific use case without requiring significant engineering resources or custom software development workflows.

What are the components of MLOps?

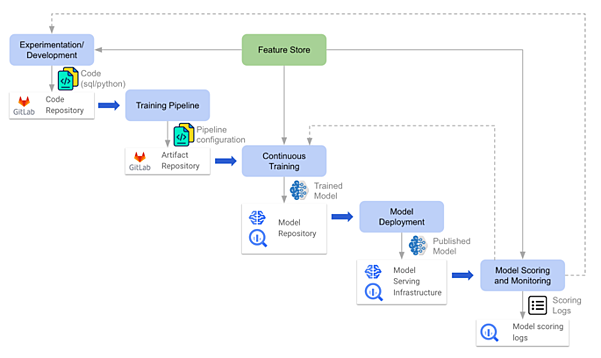

Figure 1: Components of MLOps system

There are many components that go into a successful MLOps solution. The following is a list of some key components:

- A version control system to store, track, and version changes to machine learning and data science code.

- A version control system to track and version changes to training/testing datasets.

- A network layer that implements the necessary network resources to ensure the MLOps solution is secured.

- An ML-based workload to execute machine learning tasks.

- Infrastructure as code (IaC) to automate the provisioning and configuration of cloud-based ML workloads and other IT infrastructure resources.

- An ML (training/retraining) pipeline to automate the steps required to train/retrain and deploy ML models.

- An orchestration tool to orchestrate and execute automated ML workflow steps.

- A model monitoring solution to monitor production models’ performance to protect against both model and data drift. The performance metrics can also be used as feedback to help improve the models’ future development and training.

- A model governance framework to make it easier to track, compare, and reproduce Machine Learning experiments and secure ML models.

- A data platform to store datasets.
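
As an illustration of the model monitoring component, the sketch below computes a population stability index (PSI), one common way to quantify data drift between a training sample and live data. This is an assumption about how drift might be measured, not a description of any particular monitoring product. The function name, the bin count, and the 0.25 alert level (an often-quoted rule of thumb, not a universal constant) are all illustrative choices.

```python
import math


def population_stability_index(reference, current, bins=10):
    """Compare two numeric samples; larger values suggest more drift."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp so values outside the reference range land in the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor keeps the logarithm defined for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    ref_p, cur_p = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


# Hypothetical feature values: the live data is shifted upwards by 0.5.
training_feature = [i / 100 for i in range(100)]
unchanged_feature = list(training_feature)
shifted_feature = [v + 0.5 for v in training_feature]

print(population_stability_index(training_feature, unchanged_feature))      # 0.0
print(population_stability_index(training_feature, shifted_feature) > 0.25)  # True
```

A monitoring job could run a check like this on a schedule for each input feature and raise an alert, or trigger the retraining pipeline, when the score crosses the chosen level.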

What are the benefits of MLOps?

The primary benefits of MLOps are efficiency, scalability, and risk reduction.

Efficiency: MLOps allows data teams to develop models faster, deliver higher-quality ML models, and deploy them to production sooner.

Scalability: MLOps also enables vast scalability and management, where thousands of models can be overseen, controlled, managed, and monitored for continuous integration (CI), continuous delivery, and continuous deployment (CD). Specifically, MLOps enables the following:

- Automated deployment of models to a variety of machines and environments.

- Scalable management and monitoring of model performance, including real-time insights into model accuracy and runtime metrics for optimized execution.

- Parallel processing across multiple cores, GPUs, or other compute resources for increased speed and efficiency when training or deploying models.

- Improved collaboration between data scientists, engineers, and ops personnel, thanks to standardized process steps and consistent goals throughout the organization.
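
The monitoring and parallel-processing points above can be sketched together. The example below scores several models concurrently and returns the ones whose accuracy falls below a threshold. Everything in it is hypothetical: the "models" are plain functions, and the names, threshold, and worker count are placeholders. Note that Python threads mainly help when scoring is I/O-bound (for example, calling a remote endpoint); CPU-heavy workloads would more likely use processes or a cluster.

```python
from concurrent.futures import ThreadPoolExecutor


def accuracy(model, examples):
    """Fraction of (input, label) pairs the model predicts correctly."""
    correct = sum(1 for x, label in examples if model(x) == label)
    return correct / len(examples)


def flag_underperformers(models, examples, threshold=0.8, max_workers=4):
    """Score every model concurrently and return the names below threshold."""
    names = list(models)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        scores = list(pool.map(lambda n: accuracy(models[n], examples), names))
    return sorted(n for n, s in zip(names, scores) if s < threshold)


# Hypothetical "models": plain functions that classify a number as positive.
models = {
    "churn_v1": lambda x: x > 0,
    "churn_v2": lambda x: x > 5,  # cut-off too high, so it misses positives
}
examples = [(-2, False), (-1, False), (1, True), (3, True), (8, True)]

print(flag_underperformers(models, examples))  # ['churn_v2']
```

The same pattern extends from two models to thousands, as long as each model's score can be computed independently of the others.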

Risk Reduction: With risk mitigation in place from an early stage of the project lifecycle (e.g., data quality, uncertainty assessment), MLOps can help reduce the overall risk of a project by enabling faster feedback and resolution of issues.

What are the best practices for MLOps?

Here are some best practices for implementing MLOps in your organization:

- Establish a dedicated team to manage your ML pipeline. This team should be responsible for developing and maintaining the pipeline, as well as monitoring model accuracy and performance.

- Use version control to track changes to your data sets and models. This will help you troubleshoot errors and ensure that your models are always up-to-date.

- Automate testing and deployment procedures using scripts or tools like Puppet or Chef. This will help you streamline the process of bringing new models into production, reducing the risk of human error.

- Create dashboards or reports that show how your models are performing over time. This will help you identify any issues early on, before they have a negative impact on your business.
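
One way to picture automated testing before deployment is a simple promotion gate: a script that compares a candidate model's metrics against the current production model and decides whether the release may go ahead. The sketch below is illustrative only. The `Metrics` fields, the `should_promote` function, and the default thresholds are assumptions, and a real gate would typically check more than accuracy and latency.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metrics:
    accuracy: float
    latency_ms: float


def should_promote(candidate, production, min_gain=0.01, max_latency_ms=200.0):
    """Return (decision, reason) for replacing the production model."""
    if candidate.latency_ms > max_latency_ms:
        return False, "latency budget exceeded"
    if candidate.accuracy < production.accuracy + min_gain:
        return False, "accuracy gain too small"
    return True, "candidate beats production"


# Hypothetical evaluation results for three models.
production = Metrics(accuracy=0.90, latency_ms=120.0)
better = Metrics(accuracy=0.93, latency_ms=150.0)
too_slow = Metrics(accuracy=0.95, latency_ms=350.0)

print(should_promote(better, production))    # (True, 'candidate beats production')
print(should_promote(too_slow, production))  # (False, 'latency budget exceeded')
```

Running a check like this as a pipeline step means a model only reaches production after passing the same criteria every time, which is where the reduction in human error comes from.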

At Aegasis Labs, we have world-class ML Engineers dedicated to building MLOps solutions. Our ML Engineers can productionize your AI initiatives, using an MLOps approach, and have done this for many leading companies. Check out our latest case study with Novai to find out more.